ARC 326 - Create a Serverless Image Processing Pipeline

Prerequisites

In order to complete this lab, you will need the following:

- An AWS account and an IAM user with admin access.

- Sign into the AWS Console using your IAM credentials.

- An SSH Client. OSX: Terminal, Windows: Putty

- An EC2 Key Pair in your account. For more information on generating a key pair, click here

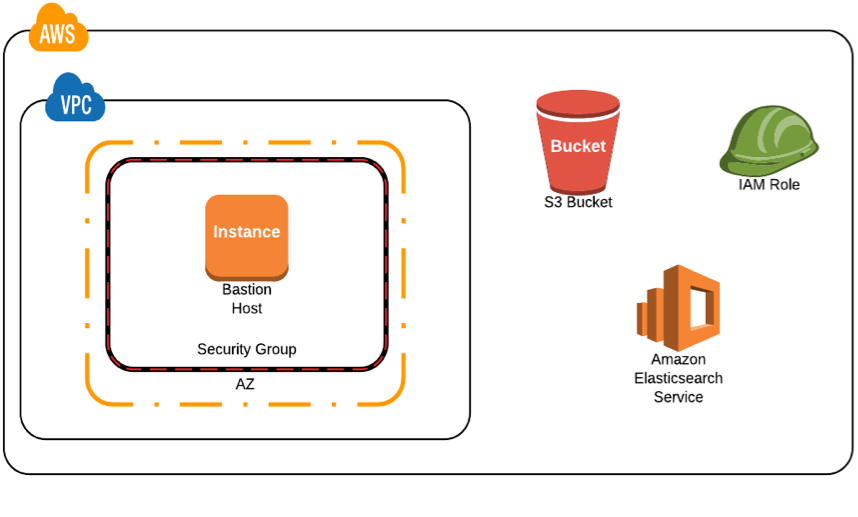

Resources Created

This workshop will create resources in the us-east-1 (N. Virginia) region in a custom VPC. It is strongly suggested that you use a non-production account to create the resources. This lab will create a new VPC, one EC2 instance, and an Amazon Elasticsearch Service cluster. This lab can be run concurrently in the same account, but be aware that you may run into account limits.

A note on Extra Credit

Oftentimes labs can be point and click without knowing why you are doing things. The extra credit sections are there to offer ideas of ways to extend the solution, but intentionally don't give step by step instructions. Supporting documentation will be included, but the solutions are the challenge. These challenges don't fit in an hour-long workshop, but offer a chance to do a deep dive into the platform.

Prepare the environment

Lab Settings

Stack Name:

- Click the link below to create the CloudFormation stack that prepares your environment for this workshop.

- Select your Key Pair from the Key Name dropdown list.

- On the following page, click 'I acknowledge that AWS CloudFormation might create IAM resources with custom names.'

- Click 'Create Stack' to create the stack.

- The stack details page will open. Keep this page open to watch the progress of the stack creation.

Capture Cloud Formation Output

After the cloud formation script completes, copy and paste the results here:

- Environment Data:

- Bastion Host IP: 54.156.172.190

- Cognito Identity Pool: us-east-1:8afea265-732a-4448-a1c7-8119d739a1ce

- Cognito User Pool Id: us-east-1_877wP1DvS

- Cognito User Pool Client Id: 4l0cj2d2hn8703n78be4h1gh7p

- ElasticSearch Endpoint: search-arc326l8836search-ywozvbuwaxk7mnhzvdq67wntxu.us-east-1.es.amazonaws.com

Begin the Lab

Deploy the Lambda Function

The lambda function will be deployed from the bastion host. To find the IP address of the Bastion Host, go to the Stack Details page and expand the 'Outputs' section. The bastion host is listed under 'BastionHost' and the IP is to the right of it. For more information on how to SSH into an instance, click here.

ssh -i keyfile.pem ec2-user@54.156.172.190

Once you have successfully SSH'd into the host:

- Install the node dependencies:

cd process-image/lambda-function
npm install
cd ..

- Package the template:

This will package the Lambda function and copy the lambda code to S3. The result is a modified CloudFormation script that can be deployed.

aws cloudformation package --template-file process-image.yaml --s3-bucket arc326l8836-deploy \
--s3-prefix lambda-deploy --output-template-file process-image.packaged.yaml

- Deploy the packaged template:

This will deploy the packaged script from the previous step. It will take a minute or two to complete. Monitor the progress in the CloudFormation console.

aws cloudformation deploy --stack-name arc326l8836-lambdas --template-file process-image.packaged.yaml \
--parameter-overrides ESDomainEndpoint=search-arc326l8836search-ywozvbuwaxk7mnhzvdq67wntxu.us-east-1.es.amazonaws.com \
ParentStack=arc326l8836 --capabilities CAPABILITY_NAMED_IAM --region us-east-1

- Click on arc326l8836-lambdas.

- Once the stack is in the state 'CREATE_COMPLETE', look at the outputs and capture the value of the ApiGateway key:

- Api Gateway Id:

Optional: Explore Lambda Function Code (expand for details)

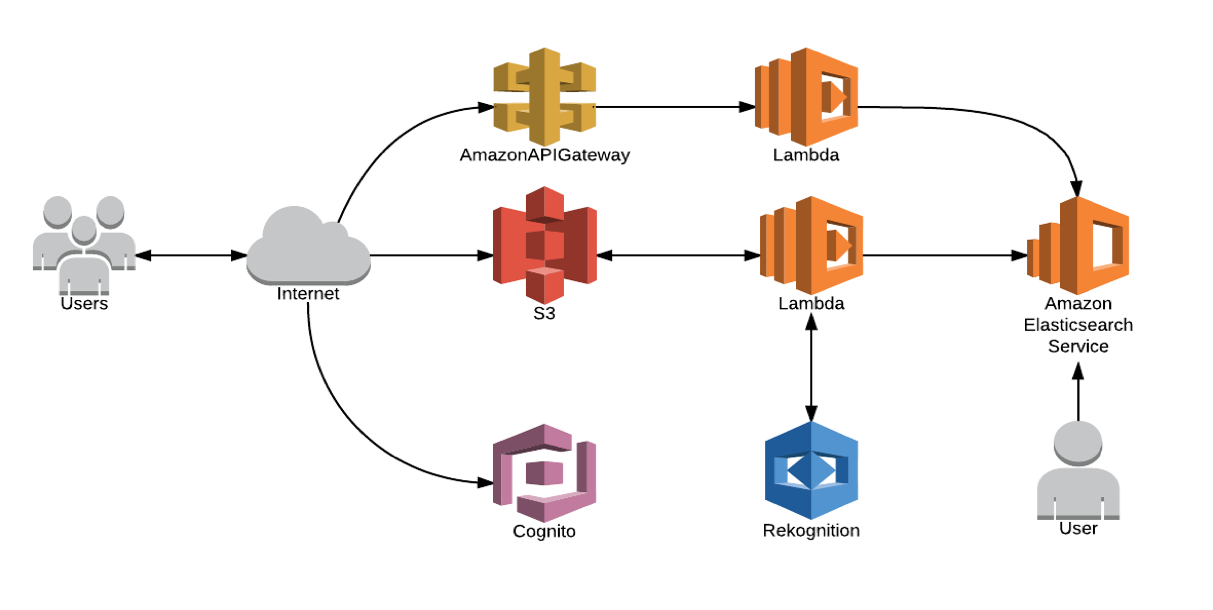

The entry point to this code is the exports.handler function. S3 posts a JSON document in the event parameter, from which the function extracts the bucket name and the object key. The image is resized to the thumbnail size, and a document is created and posted to Elasticsearch. It is important to note that the postDocumentToES function includes the SigV4 signature that Amazon Elasticsearch Service uses to authenticate the request.

var Promise = require("bluebird");
var AWSXRay = require('aws-xray-sdk');
var AWS = AWSXRay.captureAWS(require('aws-sdk'));
var fs = require('fs');
var elasticsearch = require('./elasticsearch.js')
var crypto = require('crypto');

'use strict';

AWS.Config.credentials.refresh();

const s3 = new AWS.S3({ region: 'us-east-1' });
const rekognition = new AWS.Rekognition({ apiVersion: '2016-06-27', region: 'us-east-1' });
const randomstring = require("randomstring");

var gm = require('gm').subClass({
    imageMagick: true
});

AWS.Config.credentials = new AWS.EnvironmentCredentials('AWS');

var metadata = Promise.promisify(require('im-metadata'));
Promise.promisifyAll(gm.prototype);

/**
 * This event fires when an object gets added to s3.
 */
exports.handler = (event, context, callback) => {
    // extract the bucket and key from the json document
    const bucket = event.Records[0].s3.bucket.name;
    const key = decodeURIComponent(event.Records[0].s3.object.key.replace(/\+/g, ' '));
    var hashKey = crypto.createHash('md5').update(key).digest('hex');
    const params = {
        Bucket: bucket,
        Key: key
    };

    // Use the temp file to store the file and thumbnail
    var basefilemame = randomstring.generate(10);
    var filename = '/tmp/' + basefilemame + '.jpg';
    var thumbFilename = '/tmp/' + basefilemame + '_thumb.jpg';
    var elasticDocument = {
        filename: filename
    };

    var throwError = false;
    if (throwError) {
        callback("This is a contrived error. Set throwError = false to fix;")
        return;
    }

    var subsegment = null;

    // Retrieve the image from S3, write the image to the temp folder, and extract the metadata
    s3.getObject(params).promise()
        .then(function(data) {
            subsegment = createSubsegment('write image');
            fs.writeFileSync(filename, data.Body);
            subsegment = closeSubsegment(subsegment);
            subsegment = createSubsegment('read metadata');
            return metadata(filename, { exif: true })
        })
        // The imageData will contain the EXIF metadata tags, and populate them into the elasticsearch json document
        .then(function(imageData) {
            subsegment = closeSubsegment(subsegment);
            elasticDocument.url = event.Records[0].s3.object.key;
            elasticDocument.thumbnail = event.Records[0].s3.object.key.replace('upload/', 'thumbnail/');
            elasticDocument.size = imageData.size;
            elasticDocument.format = imageData.format;
            elasticDocument.colorspace = imageData.colorspace;
            elasticDocument.height = imageData.height;
            elasticDocument.width = imageData.width;
            elasticDocument.orientation = imageData.orientation;
            for (var k in imageData.exif) elasticDocument[k] = imageData.exif[k];

            // Use Rekognition to detect scene attributes on the image
            var params = {
                Image: {
                    S3Object: {
                        Bucket: bucket,
                        Name: key
                    }
                },
                MaxLabels: 123,
                MinConfidence: 70
            };
            return rekognition.detectLabels(params).promise();
        })
        // Add the labels to the elasticsearch document
        .then(function(rekognitionData) {
            elasticDocument.labels = [];
            for (var i = 0; i < rekognitionData.Labels.length; i++) {
                elasticDocument.labels.push(rekognitionData.Labels[i].Name);
            }
            // Post the document to Elasticsearch
            return elasticsearch.postDocumentToES(hashKey, elasticDocument);
        })
        // Create the thumbnail
        .then(function(esData) {
            console.log(esData);
            subsegment = createSubsegment('create thumbnail');
            return gm(filename).thumbAsync(80, 80, thumbFilename, 95);
        })
        // Copy the thumbnail up to s3
        .then(function(gmData) {
            subsegment = closeSubsegment(subsegment);
            var photoKey = key.replace('upload/', 'thumbnail/');
            var file = fs.readFileSync(thumbFilename);
            return s3.upload({
                Bucket: bucket,
                Key: photoKey,
                Body: file,
                ACL: 'public-read'
            }).promise();
        })
        // return
        .then(function(s3Upload) {
            // If this was processed off of the DLQ, this would be the logical step to DeleteMessage and pass in the message handle.
            callback(null, "success");
        })
        .catch(function(err) {
            subsegment = closeSubsegment(subsegment);
            callback(err);
        });
}

function createSubsegment(name) {
    var currentSegment = AWSXRay.getSegment();
    if (currentSegment != undefined) {
        return currentSegment.addNewSubsegment(name)
    } else {
        return null;
    }
}

function closeSubsegment(segment) {
    if (segment != null) {
        segment.close()
    }
    return null;
}
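The handler decodes the object key before using it because S3 URL-encodes key names in event notifications, delivering spaces as `+`. The following is a minimal sketch of just that extraction step, run against a made-up event; `extractS3Object` and the sample bucket and key are hypothetical names used for illustration and are not part of the lab code.

```javascript
// Hypothetical helper mirroring the first lines of exports.handler.
function extractS3Object(event) {
  const record = event.Records[0];
  return {
    bucket: record.s3.bucket.name,
    // Turn '+' back into spaces first, then percent-decode the rest of the key.
    key: decodeURIComponent(record.s3.object.key.replace(/\+/g, ' '))
  };
}

// Made-up event containing only the fields the handler reads.
const sampleEvent = {
  Records: [
    { s3: { bucket: { name: 'example-bucket' }, object: { key: 'upload/my+photo%281%29.jpg' } } }
  ]
};

console.log(extractS3Object(sampleEvent));
```

Without the decoding step, the `getObject` call would look for a key containing a literal `+` and fail for any file whose name has spaces or special characters.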

Optional: Explore Cloud Formation Template

This cloud formation template creates the lambda functions, API Gateway endpoints, and S3 bucket the application uses.

AWSTemplateFormatVersion: '2010-09-09'
Transform: AWS::Serverless-2016-10-31
Description: The template creates the roles, buckets, and elasticsearch domain for
  the rekognition to elasticsearch demo
Parameters:
  ESDomainEndpoint:
    Type: String
    Description: The endpoint of the Elasticsearch domain.
  ParentStack:
    Type: String
    Description: The name of the parent stack for this template
Resources:
  S3Bucket:
    Type: AWS::S3::Bucket
    Properties:
      AccessControl: PublicRead
      BucketName: !Join ['', [!Ref 'ParentStack', '-workshop']]
      CorsConfiguration:
        CorsRules:
          - AllowedHeaders:
              - '*'
            AllowedMethods:
              - GET
              - POST
              - PUT
            AllowedOrigins:
              - '*'
            ExposedHeaders:
              - ETag
            MaxAge: 3000
      WebsiteConfiguration:
        IndexDocument: index.html
        ErrorDocument: error.html
  S3BucketPolicy:
    Type: AWS::S3::BucketPolicy
    Properties:
      Bucket: !Ref 'S3Bucket'
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal: '*'
            Action: s3:GetObject
            Resource: !Join ['', ['arn:aws:s3:::', !Ref S3Bucket, /*]]
  DLQ:
    Type: "AWS::SQS::Queue"
    Properties:
      DelaySeconds: 10
      QueueName: !Sub ${ParentStack}-process-image-dlq
      VisibilityTimeout: 60
  LambdaFunction:
    Type: AWS::Serverless::Function
    Properties:
      Handler: index.handler
      Runtime: nodejs6.10
      MemorySize: 512
      Timeout: 60
      FunctionName: !Sub ${ParentStack}-process-image
      Role: !Sub 'arn:aws:iam::${AWS::AccountId}:role/${ParentStack}-LambdaServiceRole'
      CodeUri: lambda-function
      Tracing: Active
      DeadLetterQueue:
        Type: SQS
        TargetArn: !GetAtt 'DLQ.Arn'
      Environment:
        Variables:
          ELASTICSEARCH_ENDPOINT: !Ref 'ESDomainEndpoint'
      Events:
        PhotoUpload:
          Type: S3
          Properties:
            Bucket: !Ref 'S3Bucket'
            Events: s3:ObjectCreated:*
            Filter:
              S3Key:
                Rules:
                  - Name: prefix
                    Value: upload/
  PingFunction:
    Type: AWS::Serverless::Function
    Properties:
      Handler: ping.handler
      Runtime: nodejs6.10
      MemorySize: 1536
      Timeout: 3
      FunctionName: !Sub ${ParentStack}-LambdaServiceRole-ping
      Role: !Sub 'arn:aws:iam::${AWS::AccountId}:role/${ParentStack}-LambdaServiceRole'
      CodeUri: lambda-function
      Tracing: Active
      Environment:
        Variables:
          ELASTICSEARCH_ENDPOINT: !Ref 'ESDomainEndpoint'
      Events:
        GetResource:
          Type: Api
          Properties:
            Path: /ping
            Method: get
            RestApiId: !Ref ImageApi
  SearchImages:
    Type: AWS::Serverless::Function
    Properties:
      Handler: search.handler
      Runtime: nodejs6.10
      MemorySize: 128
      Timeout: 3
      FunctionName: !Sub ${ParentStack}-LambdaServiceRole-search
      Role: !Sub 'arn:aws:iam::${AWS::AccountId}:role/${ParentStack}-LambdaServiceRole'
      CodeUri: lambda-function
      Tracing: Active
      Environment:
        Variables:
          ELASTICSEARCH_ENDPOINT: !Ref 'ESDomainEndpoint'
      Events:
        GetResource:
          Type: Api
          Properties:
            Path: /images
            Method: get
            RestApiId: !Ref ImageApi
  LoadTester:
    Type: AWS::Serverless::Function
    Properties:
      Handler: load.handler
      Runtime: nodejs6.10
      MemorySize: 1536
      Timeout: 240
      FunctionName: !Sub ${ParentStack}-LoadTester
      Role: !Sub 'arn:aws:iam::${AWS::AccountId}:role/${ParentStack}-LambdaServiceRole'
      CodeUri: lambda-function
  CognitoApiPolicy:
    Type: AWS::IAM::ManagedPolicy
    Properties:
      ManagedPolicyName: !Sub ${ParentStack}-lambdas-CognitoApiPolicy
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Deny
            Action:
              - 'execute-api:Invoke'
            Resource:
              - !Sub arn:aws:execute-api:${AWS::Region}:${AWS::AccountId}:${ImageApi}/prod/GET/ping
          - Effect: Allow
            Action:
              - 'execute-api:Invoke'
            Resource:
              - !Sub arn:aws:execute-api:${AWS::Region}:${AWS::AccountId}:${ImageApi}/prod/GET/images
          - Effect: Allow
            Action:
              - 'lambda:InvokeFunction'
            Resource:
              - !GetAtt 'LoadTester.Arn'
      Description: elasticsearch and rekognition lambda role
      Roles:
        - !Sub ${ParentStack}-CognitoUnauthRole
  ImageApi:
    Type: AWS::Serverless::Api
    Properties:
      StageName: prod
      DefinitionBody:
        swagger: "2.0"
        securityDefinitions:
          sigv4:
            type: "apiKey"
            name: "Authorization"
            in: "header"
            x-amazon-apigateway-authtype: "awsSigv4"
        info:
          version: "1.0"
          title: !Ref 'AWS::StackName'
        paths:
          /images:
            get:
              security:
                - sigv4: []
              parameters:
                - name: search
                  in: query
                  description: The string to search for
                  required: true
                  schema:
                    type: string
              responses: {}
              x-amazon-apigateway-integration:
                uri: !Sub arn:aws:apigateway:${AWS::Region}:lambda:path/2015-03-31/functions/${SearchImages.Arn}/invocations
                passthroughBehavior: "when_no_match"
                httpMethod: "POST"
                type: "aws_proxy"
            options:
              consumes:
                - application/json
              produces:
                - application/json
              responses:
                '200':
                  description: 200 response
                  schema:
                    $ref: "#/definitions/Empty"
                  headers:
                    Access-Control-Allow-Origin:
                      type: string
                    Access-Control-Allow-Methods:
                      type: string
                    Access-Control-Allow-Headers:
                      type: string
              x-amazon-apigateway-integration:
                responses:
                  default:
                    statusCode: 200
                    responseParameters:
                      method.response.header.Access-Control-Allow-Methods: "'DELETE,GET,HEAD,OPTIONS,PATCH,POST,PUT'"
                      method.response.header.Access-Control-Allow-Headers: "'Content-Type,Authorization,X-Amz-Date,X-Api-Key,X-Amz-Security-Token'"
                      method.response.header.Access-Control-Allow-Origin: "'*'"
                passthroughBehavior: when_no_match
                requestTemplates:
                  application/json: "{\"statusCode\": 200}"
                type: mock
          /ping:
            get:
              responses: {}
              security:
                - sigv4: []
              x-amazon-apigateway-integration:
                uri: !Sub arn:aws:apigateway:${AWS::Region}:lambda:path/2015-03-31/functions/${PingFunction.Arn}/invocations
                passthroughBehavior: "when_no_match"
                httpMethod: "POST"
                type: "aws_proxy"
            options:
              consumes:
                - application/json
              produces:
                - application/json
              responses:
                '200':
                  description: 200 response
                  schema:
                    $ref: "#/definitions/Empty"
                  headers:
                    Access-Control-Allow-Origin:
                      type: string
                    Access-Control-Allow-Methods:
                      type: string
                    Access-Control-Allow-Headers:
                      type: string
              x-amazon-apigateway-integration:
                responses:
                  default:
                    statusCode: 200
                    responseParameters:
                      method.response.header.Access-Control-Allow-Methods: "'DELETE,GET,HEAD,OPTIONS,PATCH,POST,PUT'"
                      method.response.header.Access-Control-Allow-Headers: "'Content-Type,Authorization,X-Amz-Date,X-Api-Key,X-Amz-Security-Token'"
                      method.response.header.Access-Control-Allow-Origin: "'*'"
                passthroughBehavior: when_no_match
                requestTemplates:
                  application/json: "{\"statusCode\": 200}"
                type: mock
Outputs:
  ApiGateway:
    Description: The arn of the api gateway
    Value: !Ref 'ImageApi'

Test the lambda function

We'll test the lambda function through the console

- Download this file: Image1

- Open the S3 Bucket Console

- Upload a sample image - IMG_0200.jpg

- Open the AWS Lambda console

- Click the monitoring tab

- Click on View logs in CloudWatch. You should see a successful record. If you check the thumbnail folder in s3, you should see IMG_0200.jpg there.

- Return to the AWS Lambda console

- Configure the 'Select a Test Event' for the Lambda Function by clicking the dropdown and clicking 'Configure Test Events'.

JSON

You can test the lambda function in the console by passing in the JSON event that triggers the lambda function. Configure this test into your lambda function by clicking 'Configure Test' on the upper right of the screen. Provide a test name and paste the snippet below as the test data.

{
  "Records": [
    {
      "eventVersion": "2.0",
      "eventTime": "1970-01-01T00:00:00.000Z",
      "requestParameters": { "sourceIPAddress": "127.0.0.1" },
      "s3": {
        "configurationId": "testConfigRule",
        "object": {
          "eTag": "0123456789abcdef0123456789abcdef",
          "sequencer": "0A1B2C3D4E5F678901",
          "key": "upload/IMG_0200.jpg",
          "size": 1024
        },
        "bucket": {
          "arn": "arn:aws:s3:::arc326l8836-workshop",
          "name": "arc326l8836-workshop",
          "ownerIdentity": { "principalId": "EXAMPLE" }
        },
        "s3SchemaVersion": "1.0"
      },
      "responseElements": {
        "x-amz-id-2": "EXAMPLE123/5678abcdefghijklambdaisawesome/mnopqrstuvwxyzABCDEFGH",
        "x-amz-request-id": "EXAMPLE123456789"
      },
      "awsRegion": "us-east-1",
      "eventName": "ObjectCreated:Put",
      "userIdentity": { "principalId": "EXAMPLE" },
      "eventSource": "aws:s3"
    }
  ]
}
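If you want to test with other images, the same kind of event can be generated instead of edited by hand. The sketch below builds only the fields that the process-image handler actually reads (bucket name and object key); `makeS3PutEvent` is a hypothetical helper, and its key encoding only approximates what S3 does in real notifications.

```javascript
// Hypothetical helper: builds a minimal S3 "ObjectCreated:Put" test event.
function makeS3PutEvent(bucket, key) {
  // Approximate S3's notification encoding: percent-encode each path segment,
  // keep '/' separators, and represent spaces as '+'.
  const encodedKey = key
    .split('/')
    .map(encodeURIComponent)
    .join('/')
    .replace(/%20/g, '+');

  return {
    Records: [
      {
        eventSource: 'aws:s3',
        eventName: 'ObjectCreated:Put',
        awsRegion: 'us-east-1',
        s3: {
          bucket: { name: bucket, arn: 'arn:aws:s3:::' + bucket },
          object: { key: encodedKey }
        }
      }
    ]
  };
}

// Print an event that can be pasted into the Lambda console as test data.
console.log(JSON.stringify(makeS3PutEvent('arc326l8836-workshop', 'upload/IMG_0200.jpg'), null, 2));
```

The object named in the event must already exist in the bucket, because the function downloads it from S3 as its first step.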

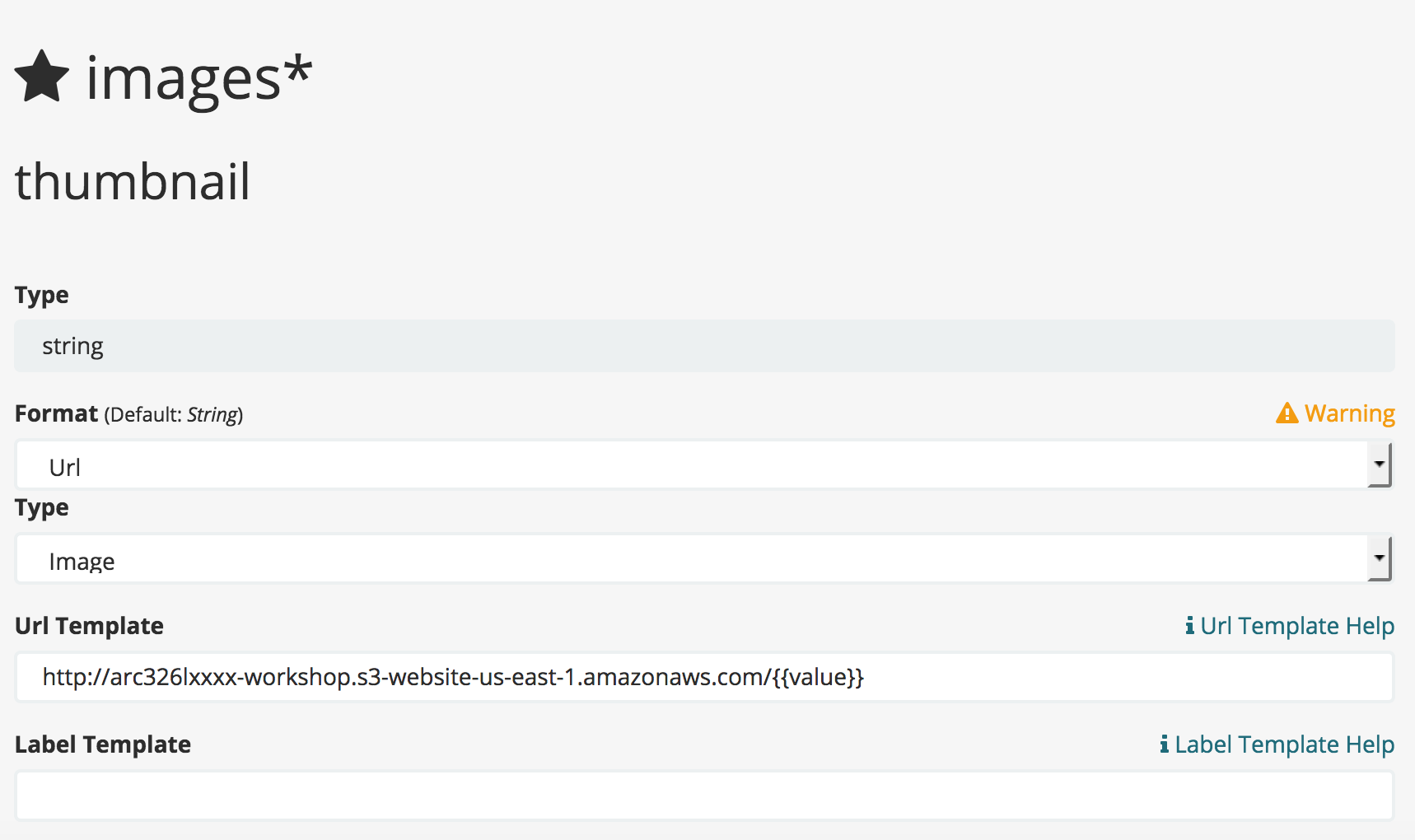

Configure Kibana

Now we can configure Kibana to view the images.

- Open Kibana

- Deselect 'Index contains time based events'

- Add the pattern 'images*'

- Click 'Create'

- Filter for 'Thumbnail', and click 'Edit' (It is the pencil icon).

- Format: Url

- Type: Image

- Url Template: http://arc326l8836-workshop.s3-website-us-east-1.amazonaws.com/{{value}}

- Label Template: <Empty String>

- Click Update Field

- Click 'Discover' on the left menu

Deploy the Application Website Function

The application is a single page application (SPA) built with the Vue framework. It is hosted from S3 using the static website hosting feature.

The bucket has already been configured for website hosting, but access is limited to the IP address associated with this machine. Since this demo is not available to the public, IP changes on your machine will render the site inaccessible.

Build and deploy the application

- Return to your bastion host to continue deploying the website.

- Configure the sample application:

Note: the sed commands will replace the tokens embedded in the code with their configuration settings.

cd ~/website
sed -i -e 's/COGNITO_ITENTITY_POOL/us-east-1:8afea265-732a-4448-a1c7-8119d739a1ce/g' src/components/conf.json
sed -i -e 's/USER_POOL_ID/us-east-1_877wP1DvS/g' src/components/conf.json
sed -i -e 's/COGNITO_CLIENT_ID/4l0cj2d2hn8703n78be4h1gh7p/g' src/components/conf.json
sed -i -e 's/STACK_NAME/arc326l8836/g' src/components/conf.json
sed -i -e 's/APIGATEWAY_ID/tketg11hsh/g' src/components/conf.json

How do you know to do this?

This is the configuration of your application. For the purposes of the workshop, the JSON file gets the configuration values directly. An automated build process would pull the correct file down for the environment and build the code.

- Install nodejs dependencies:

npm install

- Build the website:

npm run build

- Deploy the static website to S3:

aws s3 sync dist/ s3://arc326l8836-workshop

- Open the sample web application
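The sed commands in the configuration step perform a plain token substitution on conf.json. As a rough illustration of the same idea in Node.js, the sketch below replaces tokens in a string; the `applyConfig` helper and the shape of the template are assumptions for illustration, since the actual contents of conf.json are not shown in this lab.

```javascript
// Hypothetical helper: replaces every occurrence of each token with its value,
// similar in effect to running `sed -e 's/TOKEN/value/g'` once per token.
function applyConfig(template, values) {
  return Object.keys(values).reduce(
    (text, token) => text.split(token).join(values[token]),
    template
  );
}

// Assumed template shape; the real conf.json may use different field names.
const template = '{"stackName": "STACK_NAME", "apiGatewayId": "APIGATEWAY_ID"}';

const rendered = applyConfig(template, {
  STACK_NAME: 'arc326l8836',
  APIGATEWAY_ID: 'tketg11hsh'
});

console.log(JSON.parse(rendered));
```

An automated build would typically keep one set of values per environment and apply them at build time rather than editing the file in place.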

Test the Application Website Function

Initially, there is no requirement to sign in, as the system is open to unauthenticated users.

The web application has two components, the image uploader and the image search screen. Since the index is empty, the search will not yield any results. Let's load some sample images.

Load Sample Images

The same file can be uploaded multiple times; it will appear multiple times in the search index.

- Save these files to disk: Lighthouse 1, Lighthouse 2, Fire truck

- Upload the three files

- Below the upload, search for 'Lighthouse' in the search box. If the results don't appear right away, wait a few seconds.

Well-Architected Review

For the rest of this workshop, we will assess the architecture against the AWS Well-Architected Framework. While there isn't enough time to do a complete review, we will highlight specific areas that are relevant to serverless architectures. For more details, review the Well-Architected Framework whitepaper and the Serverless Lens.

General Serverless Principles

The Well-Architected Framework identifies a set of general design principles to facilitate good design in the cloud for serverless applications:

- Speedy, simple, singular: Functions are concise, short, single purpose and their environment may live up to their request lifecycle. Transactions are efficiently cost aware and thus faster executions are preferred.

- Think concurrent requests, not total requests: Serverless applications take advantage of the concurrency model, and tradeoffs at the design level are evaluated based on concurrency.

- Share nothing: Function runtime environment and underlying infrastructure are short-lived, therefore local resources such as temporary storage are not guaranteed. State can be manipulated within a state machine execution lifecycle, and persistent storage is preferred for highly durable requirements.

- Assume no hardware affinity: Underlying infrastructure may change. Leverage code or dependencies that are hardware-agnostic as CPU flags, for example, may not be available consistently.

- Orchestrate your application with state machines, not functions: Chaining Lambda executions within the code to orchestrate the workflow of your application results in a monolithic and tightly coupled application. Instead, use a state machine to orchestrate transactions and communication flows.

- Use events to trigger transactions: Events such as writing a new Amazon S3 object or an update to a database allow for transaction execution in response to business functionalities. This asynchronous event behavior is often consumer agnostic and drives just-in-time processing to ensure lean service design.

- Design for failures and duplicates: Operations triggered from requests/events must be idempotent as failures can occur and a given request/event can be delivered more than once. Include appropriate retries for downstream calls.
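To make the last principle concrete: the lab's handler passes an MD5 hash of the object key to postDocumentToES. Assuming that hash is used as the Elasticsearch document ID, a redelivered event for the same object key overwrites the existing document instead of adding a second one. The sketch below shows the idea with an in-memory `Map` standing in for the index; `documentId` and `indexImage` are hypothetical names.

```javascript
const crypto = require('crypto');

// Same derivation the lab's handler uses for hashKey.
function documentId(key) {
  return crypto.createHash('md5').update(key).digest('hex');
}

// In-memory stand-in for an index keyed by document ID (illustration only).
const index = new Map();

function indexImage(key, doc) {
  // Writing by a deterministic ID makes the operation idempotent:
  // repeating it leaves the index in the same state.
  index.set(documentId(key), doc);
}

indexImage('upload/lighthouse.jpg', { labels: ['Lighthouse'] });
indexImage('upload/lighthouse.jpg', { labels: ['Lighthouse'] }); // duplicate delivery of the same event

console.log(index.size);
```

This covers retries of the same event only. Uploading the same file again under a different object key produces a different ID and therefore a separate document.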

Security

The security of any application is a primary concern. Security assessments cover many dimensions:

- Authentication: Who is using the system

- Authorization: Are the users permitted to perform this action

- Encryption at Rest: Is the data at rest safe?

- Encryption in Transit: Is the data in transit safe?

- Intrusion Detection

- Data Loss Prevention

[SEC3] How are you limiting automated access to AWS resources? (e.g. applications, scripts, and/or third party tools)

Controlling access to AWS resources consists of the authentication and authorization mechanisms in the application.

Authentication

Determining the actor depends on the application that is accessing a resource. For mobile or web applications, Amazon Cognito offers an authentication system that can scale to millions of users. Cognito allows you to integrate with SAML2 identity providers, use OAuth providers such as Facebook and Amazon, or create your own custom authentication method.

Once a user is authenticated, Cognito generates a set of short-lived IAM credentials that get stored on the web page or inside the mobile application.

For simplicity, this lab uses an unauthenticated user to create the IAM credentials. Unauthenticated users do not require an authentication step, but still receive a set of short-lived IAM credentials.

For any custom API endpoints, this lab uses Amazon API Gateway to expose and secure the endpoints.

With Amazon API Gateway, you aren't required to use Cognito for authentication. You can use any of the authentication methods listed below.

- AWS: Each call will be signed with a SigV4 signature and pass the AWS access key. The credentials can be generated using Cognito or through the AWS API. This method provides access to non-API Gateway AWS resources using the same credentials.

- API Key: Generate an API key to authenticate API calls.

- Custom Authorizer: Write custom code to authenticate the user using headers, cookies, etc.
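For background on the first option, a SigV4 signature is computed with a signing key derived from the secret access key through a chain of HMAC-SHA256 operations over the date, region, and service. The sketch below shows only that key-derivation step; building the canonical request and the string to sign is omitted, and the secret key, date, and service values are placeholders. In practice the AWS SDK performs all of this for you.

```javascript
const crypto = require('crypto');

// HMAC-SHA256 helper returning a raw Buffer so it can be used as the next key.
function hmac(key, data) {
  return crypto.createHmac('sha256', key).update(data, 'utf8').digest();
}

// Derives the SigV4 signing key: date -> region -> service -> "aws4_request".
function deriveSigningKey(secretKey, dateStamp, region, service) {
  const kDate = hmac('AWS4' + secretKey, dateStamp);
  const kRegion = hmac(kDate, region);
  const kService = hmac(kRegion, service);
  return hmac(kService, 'aws4_request');
}

// Placeholder inputs for illustration only; never hard-code real credentials.
const signingKey = deriveSigningKey('EXAMPLE_SECRET_KEY', '20171127', 'us-east-1', 'execute-api');
console.log(signingKey.toString('hex'));
```

Because the key is scoped to a single day, region, and service, a leaked signature cannot be reused against other services or on later dates.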

Authorization

Once you have a set of credentials, you have access to any AWS resource that a policy attached to the underlying role allows. That may be an API Gateway endpoint, but it can be any AWS service. It is common to write directly to S3 or SQS queues in addition to calling API Gateway endpoints.

Example IAM Policy

The following policy allows GET and POST access to the pets endpoint for any IAM user, role, or group that has the policy attached to it.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "Stmt1507229653000",
      "Effect": "Allow",
      "Action": [
        "execute-api:Invoke"
      ],
      "Resource": [
        "arn:aws:execute-api:us-east-1:a111111111111:h38ks93e/Prod/POST/pets",
        "arn:aws:execute-api:us-east-1:a111111111111:h38ks93e/Prod/GET/pets"
      ]
    }
  ]
}

Lambda Credentials

Lambda functions run under an IAM role assigned to the function. When the function executes, it is assigned a set of short-lived IAM credentials that allow it to authenticate to other AWS resources such as S3, DynamoDB, or API Gateway endpoints.

Lab Instructions

In this lab, we will demonstrate the authorization capabilities of IAM.

- Open your sample application page.

- Click the 'Test API Endpoint' button. An error will be thrown because the role assigned to this page does not have access to that API gateway endpoint.

- Go to the AWS IAM Console for the unauthenticated Cognito role.

- Click on the policy 'arc326l8836-lambdas-CognitoApiPolicy' and click 'Edit policy'.

- The policy editor will open. Click the 'JSON' tab.

- Change Deny to Allow. Click Save.

- Refresh the sample application page

- Click the 'Test API Endpoint' button. You should see a count of the number of images in the Amazon Elasticsearch Service cluster. If it doesn't work right away, wait 15 seconds, refresh and try again.

- Extra Credit: Create an authenticated Cognito user with a login page

Supporting Documentation

- What is Cognito?

- Authentication Flow

- Cognito SDK

- Open the web application, click 'Sign In'

- Depending on your browser, you may see a warning that you are using an insecure page. You can ignore this warning, since all of the Cognito API calls are secured; only the static content is not. In the Additional Ideas section, you can learn about using CloudFront to cache the content on the CDN and provide HTTPS support.

- You will have to register a user with a real email address. Do this by clicking 'Register' on the login screen.

- You will receive a confirmation email from Cognito to ensure the validity of the email. Enter the code in the confirmation textbox that appears after you press 'Register'.

- Log in to the web application.

- Do the integrations to API Gateway work? Why is everything broken? Which role are we assuming?

Hint...

Attach the IAM policies associated to the unauthenticated role to the authenticated role. Detach the policies from the unauthenticated role. Now unauthenticated users will be denied access!
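The Deny-to-Allow change in the lab steps works because of how IAM combines policy statements: an explicit Deny always wins, an Allow grants access only when no Deny matches, and anything not mentioned is denied by default. The sketch below models that order of evaluation in a heavily simplified form (exact string matches only, no wildcards or conditions); the `evaluate` function and the ARNs are hypothetical.

```javascript
// Simplified model of IAM evaluation: exact matches only. Real IAM also
// supports wildcards, conditions, and several policy types.
function evaluate(statements, action, resource) {
  const matching = statements.filter(
    (s) => s.Action.includes(action) && s.Resource.includes(resource)
  );
  if (matching.some((s) => s.Effect === 'Deny')) return 'Deny'; // explicit deny wins
  if (matching.some((s) => s.Effect === 'Allow')) return 'Allow';
  return 'ImplicitDeny'; // nothing matched, so access is denied by default
}

// Hypothetical ARNs standing in for the lab's /ping and /images endpoints.
const pingArn = 'arn:aws:execute-api:us-east-1:111111111111:exampleapi/prod/GET/ping';
const imagesArn = 'arn:aws:execute-api:us-east-1:111111111111:exampleapi/prod/GET/images';

const policy = [
  { Effect: 'Deny', Action: ['execute-api:Invoke'], Resource: [pingArn] },
  { Effect: 'Allow', Action: ['execute-api:Invoke'], Resource: [imagesArn] }
];

const before = evaluate(policy, 'execute-api:Invoke', pingArn);
policy[0].Effect = 'Allow'; // the edit made in the policy editor
const after = evaluate(policy, 'execute-api:Invoke', pingArn);

console.log(before, '->', after);
```

The same logic explains the extra credit behavior: a role with no matching statements at all gets an implicit deny, so the authenticated role needs the policy attached before its calls succeed.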

[SEC11] How are you encrypting and protecting your data in transit?

- Using the browser developer tools, verify all API calls are over HTTPS.

Reliability

[REL 7] How does your system withstand component failures?

While the serverless architecture is very reliable, much of the reliability must come from the application itself. Resilient applications will continue to operate in a degraded capacity in the case of a component failure. In the following example, we will intentionally break the connection between Lambda and the Amazon Elasticsearch Service cluster.

Lab Instructions

- Go to your SSH session

- Break your lambda function:

cd ~/process-image
sudo chmod +x break-lambda.sh
./break-lambda.sh

What's this doing???

The shell script uses sed to change the code to always throw an exception.

sed -i -e 's/throwError = false/throwError = true/g' lambda-function/index.js

The lambda code will execute:

var throwError = true;
if (throwError) {
    callback({error: "This is a contrived error. Set throwError = false to fix;"})
    return;
}

- Package the broken function:

aws cloudformation package --template-file process-image.yaml --s3-bucket arc326l8836-deploy \
--s3-prefix lambda-deploy --output-template-file process-image.packaged.yaml

- Deploy the broken function:

aws cloudformation deploy --stack-name arc326l8836-lambdas --template-file process-image.packaged.yaml \
--parameter-overrides ESDomainEndpoint=search-arc326l8836search-ywozvbuwaxk7mnhzvdq67wntxu.us-east-1.es.amazonaws.com \
ParentStack=arc326l8836 --capabilities CAPABILITY_NAMED_IAM --region us-east-1

This may take a minute or two to complete.

- Right-click Image 488 and choose 'Save Link As' to download a random sample image.

- Upload some images using the sample web application. The uploads will appear successful.

- Check the lambda logs. It may take a couple of minutes for the errors to make it into the logs.

- Lambda will try twice, so you will see 2 entries in the log. It will then send the message to the Dead Letter Queue (DLQ), if one is configured.

- What happened? Check the SQS Console for the Dead Letter Queue.

- Search for arc326l8836-process-image-dlq in the search window. You should see some messages appear in the queue after a couple of tries in lambda. It usually takes about 2-5 minutes for the queue items to appear.

Additional Info on dead letter queues

- Go back to your SSH window

- Fix the lambda function:

sudo chmod +x fix-lambda.sh
./fix-lambda.sh

What's this doing now???

The shell script uses sed to disable the code that always throws an exception.

sed -i -e 's/throwError = true/throwError = false/g' lambda-function/index.js

The lambda code will execute:

var throwError = false;
if (throwError) {
    callback({error: "This is a contrived error. Set throwError = false to fix;"})
    return;
}

- Package the fixed function:

aws cloudformation package --template-file process-image.yaml --s3-bucket arc326l8836-deploy \
--s3-prefix lambda-deploy --output-template-file process-image.packaged.yaml

- Deploy the fixed function:

aws cloudformation deploy --stack-name arc326l8836-lambdas --template-file process-image.packaged.yaml \
--parameter-overrides ESDomainEndpoint=search-arc326l8836search-ywozvbuwaxk7mnhzvdq67wntxu.us-east-1.es.amazonaws.com \
ParentStack=arc326l8836 --capabilities CAPABILITY_NAMED_IAM --region us-east-1

- Extra Credit: reprocess the DLQ using a lambda function triggered by CloudWatch log events.

Supporting Documentation

- Create a schedule triggered lambda function

- Sending and receiving SQS messages in Javascript.

- The lambda function should call the 'ReceiveMessage' API. Keep polling until the queue is empty or your lambda function times out.

- You don't need to create a new process-image function; you can call the same one. If you call it asynchronously, make sure you pass in the message handle. If you call it synchronously, consider the impacts on lambda concurrency, cost, and scalability.
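The polling loop described in the hints above can be sketched as follows. This is not a solution to the extra credit: the `queue` object is a hypothetical adapter whose `receive()` and `remove(handle)` methods would wrap the SQS ReceiveMessage and DeleteMessage calls, and an in-memory fake is used here so the sketch runs on its own. It assumes a Node.js version that supports async/await.

```javascript
// Drains a queue until it is empty or the deadline passes.
// `queue` is a hypothetical adapter around the SQS receive/delete calls.
async function drainQueue(queue, processMessage, deadlineMs) {
  let processed = 0;
  while (Date.now() < deadlineMs) {
    const messages = await queue.receive();
    if (messages.length === 0) break; // queue is empty
    for (const message of messages) {
      await processMessage(message.body);
      await queue.remove(message.handle); // delete only after successful processing
      processed++;
    }
  }
  return processed;
}

// In-memory stand-in for SQS, returning at most two messages per receive call.
function makeFakeQueue(bodies) {
  const pending = bodies.map((body, i) => ({ handle: 'h' + i, body }));
  return {
    receive: async () => pending.slice(0, 2),
    remove: async (handle) => {
      const i = pending.findIndex((m) => m.handle === handle);
      if (i >= 0) pending.splice(i, 1);
    }
  };
}

drainQueue(
  makeFakeQueue(['event-1', 'event-2', 'event-3']),
  async (body) => console.log('reprocessing', body),
  Date.now() + 1000 // stop well before the function would time out
).then((count) => console.log(count, 'messages drained'));
```

Deleting a message only after it has been processed means a crash mid-run leaves the message on the queue for the next attempt, which is why the processing step itself should be idempotent.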

The result of this failure is not a total system failure. Users can still log into the application and upload their photos, but there will be a backlog of images that require processing. Once the problem is resolved, the backlog of images will be processed as Lambda scales up.

A second dimension to reliability is throttling requests. One consideration is the elasticity of the downstream systems from the lambda functions. Even though the lambda functions can scale rapidly, the downstream dependencies may not. In this case, you are depending on S3, Rekognition, and the ElasticSearch cluster.

There are two ways to handle the throttling:

- Throttle the input to the system. In our example, this is handled by API Gateway for the search function.

- Queue the work prior to the constraint. In our example, if the Elasticsearch cluster returns an error, the event is placed on the dead letter queue for later processing.

Extra Credit: Throttle the S3 update lambda function [Optional]

- Review Securing Serverless Architectures

- Create an API Gateway endpoint that exposes the process-image lambda function.

- Create a new lambda function that processes the S3 create event. The lambda function calls the API Gateway endpoint that triggers process-image. If the system returns an HTTP code of 429, the call has been throttled. Return an error and the DLQ should handle it.

[REL3] How does your system adapt to changes in demand?

A scalable system can provide elasticity to add and remove resources automatically so that they closely match the current demand at any given point in time.

Best practices:

- Automated Scaling - Compute scaling is handled by Lambda. What about downstream systems?

- Load Test

Lab Instructions

We have supplied a load tester using AWS Lambda, of course. Because they can scale quickly and run concurrently, lambda functions are an excellent tool for running load tests. In this case, the lambda function randomly pulls an image from a set of sample images and uploads it to Amazon S3. This triggers the image processing workflow.

Through testing, each load test lambda function can support up to 10 uploads per second on a consistent basis. This will fluctuate depending on the load test being run.

- Open the web application

- Find the section 'Load Test'

- Run a load test with various parameters. The default limit is 1,000 concurrent lambda executions, so you can test the limit by running more than 125 requests per second. This is because the typical process-image function takes about 8 seconds to complete, meaning that at 125 requests per second there are about 8*125 = 1,000 lambda functions running at any given time. More information on concurrent execution.

- View the results in the monitoring section.
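The arithmetic behind the 125 requests per second figure can be written out as a small sketch. The function names are hypothetical, and the estimate assumes a steady arrival rate and a constant average duration.

```python
def estimated_concurrency(requests_per_second, avg_duration_seconds):
    """Approximate number of functions running at once at a steady rate."""
    return requests_per_second * avg_duration_seconds


def max_rate_within_limit(concurrency_limit, avg_duration_seconds):
    """Highest steady request rate that stays within the concurrency limit."""
    return concurrency_limit / avg_duration_seconds


# Figures from the lab: about 8 seconds per image, limit of 1,000.
print(estimated_concurrency(125, 8))   # 1000
print(max_rate_within_limit(1000, 8))  # 125.0
```

If the process-image function gets faster or slower (for example after changing its memory setting), the rate needed to reach the limit changes accordingly.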

Performance Efficiency

[PERF 7] How do you monitor your resources post-launch to ensure they are performing as expected?

Lab Instructions

- Extra Credit: Make a cloudwatch dashboard for your load test

- Don't put the lambda functions in your VPC unless you need to. It will increase your cold start time, and service limits for ENIs and other network constraints will impact your ability to scale.

- Reduce the size of your code deployable

- Take advantage of /tmp space

- Reuse database connections and static initialization of cacheable data

- For more information, review the lambda best practices

Cost Optimization

[COST 2] Have you sized your resources to meet your cost targets?

Lambda is a very cost-effective solution, but at scale, costs can be a consideration. By optimizing the code to use only the CPU and memory required, you can have a significant impact on the overall cost to serve.

- Using the cloudwatch metrics, enter in the average processing time for the lambda function (in ms):

- Enter the amount of memory reserved for the function

- If you are processing 200 million images, the cost to serve will be: $13369.13

- Now, go to the Lambda Console and change the memory to 1024 MB in the Basic Settings section.

- Test the lambda function again to get the time to process the image

- Replace the values above and compare which is more cost effective
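The comparison above can also be done offline with a short sketch. The prices and free tier amounts below are assumptions based on Lambda list pricing from around the time of this workshop; check the current pricing page before relying on them. The sketch also ignores any rounding of billed duration. With 512 MB (the MemorySize in the lab template) and 8000 ms (the approximate duration quoted in the load test section), these assumed values reproduce the $13369.13 figure shown above.

```python
# Assumed prices; verify against the current AWS Lambda pricing page.
PRICE_PER_REQUEST = 0.20 / 1_000_000   # USD per request
PRICE_PER_GB_SECOND = 0.00001667       # USD per GB-second
FREE_REQUESTS = 1_000_000              # assumed monthly free tier
FREE_GB_SECONDS = 400_000              # assumed monthly free tier


def lambda_cost(invocations, avg_duration_ms, memory_mb, include_free_tier=True):
    """Estimate the cost in USD of running a function `invocations` times."""
    gb_seconds = invocations * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0)
    billable_requests = invocations
    if include_free_tier:
        gb_seconds = max(gb_seconds - FREE_GB_SECONDS, 0)
        billable_requests = max(invocations - FREE_REQUESTS, 0)
    return gb_seconds * PRICE_PER_GB_SECOND + billable_requests * PRICE_PER_REQUEST


# 200 million images at 512 MB and 8000 ms per image.
print("%.2f" % lambda_cost(200_000_000, 8000, 512))  # 13369.13
```

To compare settings, call `lambda_cost` again with 1024 MB and the duration you measure after changing the memory. Doubling the memory only pays off if the duration drops by more than half.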

Operations Excellence

[OPS 2] How are you doing configuration management for your workload?

In this lab, we used the Serverless Application Model (SAM), which is an extension of CloudFormation. It allows you to check your infrastructure code into a version control system and use automation to deploy the code. The lab has you enter the commands on the command line to get a sense of what is happening behind the scenes. However, in a typical development scenario, you would commit the CloudFormation code to your version control system, and the continuous integration / continuous delivery system would deploy the changes and run validation tests.

Extra Credit: CI/CD Pipeline

- Create an AWS CodeCommit repository. Code commit getting started.

- Create an AWS CodePipeline pipeline to deploy this lab. Code Pipeline Documentation

- Create a code pipeline job that uses a cloudformation deployment action.

Lab Instructions

[OPS 4] How do you monitor your workload to ensure it is operating as expected?

CloudWatch has the capability to notify you when metrics reach certain thresholds. In this case, it would make sense to be notified when the process-image function reports errors.

- Open CloudWatch

- Click Alarms on the left side menu

- Click 'Create Alarm'

- In 'Browse Metrics' type 'arc326l8836-process-image' and press enter

- Select the metric with the FunctionName of arc326l8836-process-image and a Metric Name of Errors

- Click Next in the lower right

- Name the alarm Process Image Errors

- Set the threshold to Whenever errors is >=10 errors for 1 consecutive period.

- Under 'Additional Settings': Treat Missing Data as 'Good'.

- Under Actions, choose Send Notification To and click New List

- Provide a name for the topic: arc326l8836-errors

- Provide your email address in the email list. You will need to respond to the verification email for it to work.

- Click Create Alarm

- Extra Credit: Use the section above to break the lambda function, and run a load test
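The console steps above correspond roughly to a single CloudWatch `put_metric_alarm` call. The following is a sketch using boto3, not part of the lab. The helper names are hypothetical, the 300 second period is an assumption (the steps above do not state one), and the topic ARN must be that of the SNS topic you created.

```python
def error_alarm_params(function_name, topic_arn, threshold=10, period_seconds=300):
    """Build put_metric_alarm arguments that mirror the console steps."""
    return {
        "AlarmName": "Process Image Errors",
        "Namespace": "AWS/Lambda",
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": period_seconds,            # assumed evaluation period length
        "EvaluationPeriods": 1,              # 1 consecutive period
        "Threshold": threshold,              # >= 10 errors
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",  # treat missing data as good
        "AlarmActions": [topic_arn],
    }


def create_alarm(function_name, topic_arn):
    # boto3 is imported lazily so the parameters can be inspected without AWS.
    import boto3

    cloudwatch = boto3.client("cloudwatch")
    cloudwatch.put_metric_alarm(**error_alarm_params(function_name, topic_arn))
```

For example, `create_alarm("arc326l8836-process-image", topic_arn)` would create the alarm, where `topic_arn` is the ARN of the arc326l8836-errors topic in your account.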

Application Performance Monitoring

AWS X-Ray gives teams visibility into the performance and service dependencies and is fully integrated into AWS Lambda.

Click here for more information on X-Ray and lambda

- Go to the Lambda Console and under the 'Debugging and error handling' section, verify that 'Enable Active Tracing' is selected.

- Visit the X-ray console. View the service map to view the traces in the application.

- Adjust the memory allocated to the lambda function and review the impact on the X-Ray traces.

- How could you optimize the cost and performance of the function? Which segments are affected by the lambda function's memory allocation and which are not?

Other Considerations

- Limits Monitoring. For those with Business or Enterprise Support, look at the AWS Limit Monitor.

- Infrastructure as Code. AWS Serverless Application Model. This workshop used the AWS Serverless Application Model to deploy all the changes to the web application. The file process-image.yaml defines the lambda functions, API Gateway endpoints, SQS queue, and S3 buckets. Pay particular attention to the security definitions in the ImageApi section. It includes the specification of the sigv4 authentication in the securityDefinitions entry.

process-image.yaml

    AWSTemplateFormatVersion: '2010-09-09'
    Transform: AWS::Serverless-2016-10-31
    Description: The template creates the roles, buckets, and elasticsearch domain for the rekognition to elasticsearch demo
    Parameters:
      ESDomainEndpoint:
        Type: String
        Description: The endpoint of the Elasticsearch domain.
      ParentStack:
        Type: String
        Description: The name of the parent stack for this template
    Resources:
      S3Bucket:
        Type: AWS::S3::Bucket
        Properties:
          AccessControl: PublicRead
          BucketName: !Join ['', [!Ref 'ParentStack', '-workshop']]
          CorsConfiguration:
            CorsRules:
              - AllowedHeaders:
                  - '*'
                AllowedMethods:
                  - GET
                  - POST
                  - PUT
                AllowedOrigins:
                  - '*'
                ExposedHeaders:
                  - ETag
                MaxAge: 3000
          WebsiteConfiguration:
            IndexDocument: index.html
            ErrorDocument: error.html
      S3BucketPolicy:
        Type: AWS::S3::BucketPolicy
        Properties:
          Bucket: !Ref 'S3Bucket'
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Principal: '*'
                Action: s3:GetObject
                Resource: !Join ['', ['arn:aws:s3:::', !Ref S3Bucket, /*]]
      DLQ:
        Type: "AWS::SQS::Queue"
        Properties:
          DelaySeconds: 10
          QueueName: !Sub ${ParentStack}-process-image-dlq
          VisibilityTimeout: 60
      LambdaFunction:
        Type: AWS::Serverless::Function
        Properties:
          Handler: index.handler
          Runtime: nodejs6.10
          MemorySize: 512
          Timeout: 60
          FunctionName: !Sub ${ParentStack}-process-image
          Role: !Sub 'arn:aws:iam::${AWS::AccountId}:role/${ParentStack}-LambdaServiceRole'
          CodeUri: lambda-function
          Tracing: Active
          DeadLetterQueue:
            Type: SQS
            TargetArn: !GetAtt 'DLQ.Arn'
          Environment:
            Variables:
              ELASTICSEARCH_ENDPOINT: !Ref 'ESDomainEndpoint'
          Events:
            PhotoUpload:
              Type: S3
              Properties:
                Bucket: !Ref 'S3Bucket'
                Events: s3:ObjectCreated:*
                Filter:
                  S3Key:
                    Rules:
                      - Name: prefix
                        Value: upload/
      PingFunction:
        Type: AWS::Serverless::Function
        Properties:
          Handler: ping.handler
          Runtime: nodejs6.10
          MemorySize: 1536
          Timeout: 3
          FunctionName: !Sub ${ParentStack}-LambdaServiceRole-ping
          Role: !Sub 'arn:aws:iam::${AWS::AccountId}:role/${ParentStack}-LambdaServiceRole'
          CodeUri: lambda-function
          Tracing: Active
          Environment:
            Variables:
              ELASTICSEARCH_ENDPOINT: !Ref 'ESDomainEndpoint'
          Events:
            GetResource:
              Type: Api
              Properties:
                Path: /ping
                Method: get
                RestApiId: !Ref ImageApi
      SearchImages:
        Type: AWS::Serverless::Function
        Properties:
          Handler: search.handler
          Runtime: nodejs6.10
          MemorySize: 128
          Timeout: 3
          FunctionName: !Sub ${ParentStack}-LambdaServiceRole-search
          Role: !Sub 'arn:aws:iam::${AWS::AccountId}:role/${ParentStack}-LambdaServiceRole'
          CodeUri: lambda-function
          Tracing: Active
          Environment:
            Variables:
              ELASTICSEARCH_ENDPOINT: !Ref 'ESDomainEndpoint'
          Events:
            GetResource:
              Type: Api
              Properties:
                Path: /images
                Method: get
                RestApiId: !Ref ImageApi
      LoadTester:
        Type: AWS::Serverless::Function
        Properties:
          Handler: load.handler
          Runtime: nodejs6.10
          MemorySize: 1536
          Timeout: 240
          FunctionName: !Sub ${ParentStack}-LoadTester
          Role: !Sub 'arn:aws:iam::${AWS::AccountId}:role/${ParentStack}-LambdaServiceRole'
          CodeUri: lambda-function
      CognitoApiPolicy:
        Type: AWS::IAM::ManagedPolicy
        Properties:
          ManagedPolicyName: !Sub ${ParentStack}-lambdas-CognitoApiPolicy
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Deny
                Action:
                  - 'execute-api:Invoke'
                Resource:
                  - !Sub arn:aws:execute-api:${AWS::Region}:${AWS::AccountId}:${ImageApi}/prod/GET/ping
              - Effect: Allow
                Action:
                  - 'execute-api:Invoke'
                Resource:
                  - !Sub arn:aws:execute-api:${AWS::Region}:${AWS::AccountId}:${ImageApi}/prod/GET/images
              - Effect: Allow
                Action:
                  - 'lambda:InvokeFunction'
                Resource:
                  - !GetAtt 'LoadTester.Arn'
          Description: elasticsearch and rekognition lambda role
          Roles:
            - !Sub ${ParentStack}-CognitoUnauthRole
      ImageApi:
        Type: AWS::Serverless::Api
        Properties:
          StageName: prod
          DefinitionBody:
            swagger: "2.0"
            securityDefinitions:
              sigv4:
                type: "apiKey"
                name: "Authorization"
                in: "header"
                x-amazon-apigateway-authtype: "awsSigv4"
            info:
              version: "1.0"
              title: !Ref 'AWS::StackName'
            paths:
              /images:
                get:
                  security:
                    - sigv4: []
                  parameters:
                    - name: search
                      in: query
                      description: The string to search for
                      required: true
                      schema:
                        type: string
                  responses: {}
                  x-amazon-apigateway-integration:
                    uri: !Sub arn:aws:apigateway:${AWS::Region}:lambda:path/2015-03-31/functions/${SearchImages.Arn}/invocations
                    passthroughBehavior: "when_no_match"
                    httpMethod: "POST"
                    type: "aws_proxy"
                options:
                  consumes:
                    - application/json
                  produces:
                    - application/json
                  responses:
                    '200':
                      description: 200 response
                      schema:
                        $ref: "#/definitions/Empty"
                      headers:
                        Access-Control-Allow-Origin:
                          type: string
                        Access-Control-Allow-Methods:
                          type: string
                        Access-Control-Allow-Headers:
                          type: string
                  x-amazon-apigateway-integration:
                    responses:
                      default:
                        statusCode: 200
                        responseParameters:
                          method.response.header.Access-Control-Allow-Methods: "'DELETE,GET,HEAD,OPTIONS,PATCH,POST,PUT'"
                          method.response.header.Access-Control-Allow-Headers: "'Content-Type,Authorization,X-Amz-Date,X-Api-Key,X-Amz-Security-Token'"
                          method.response.header.Access-Control-Allow-Origin: "'*'"
                    passthroughBehavior: when_no_match
                    requestTemplates:
                      application/json: "{\"statusCode\": 200}"
                    type: mock
              /ping:
                get:
                  responses: {}
                  security:
                    - sigv4: []
                  x-amazon-apigateway-integration:
                    uri: !Sub arn:aws:apigateway:${AWS::Region}:lambda:path/2015-03-31/functions/${PingFunction.Arn}/invocations
                    passthroughBehavior: "when_no_match"
                    httpMethod: "POST"
                    type: "aws_proxy"
                options:
                  consumes:
                    - application/json
                  produces:
                    - application/json
                  responses:
                    '200':
                      description: 200 response
                      schema:
                        $ref: "#/definitions/Empty"
                      headers:
                        Access-Control-Allow-Origin:
                          type: string
                        Access-Control-Allow-Methods:
                          type: string
                        Access-Control-Allow-Headers:
                          type: string
                  x-amazon-apigateway-integration:
                    responses:
                      default:
                        statusCode: 200
                        responseParameters:
                          method.response.header.Access-Control-Allow-Methods: "'DELETE,GET,HEAD,OPTIONS,PATCH,POST,PUT'"
                          method.response.header.Access-Control-Allow-Headers: "'Content-Type,Authorization,X-Amz-Date,X-Api-Key,X-Amz-Security-Token'"
                          method.response.header.Access-Control-Allow-Origin: "'*'"
                    passthroughBehavior: when_no_match
                    requestTemplates:
                      application/json: "{\"statusCode\": 200}"
                    type: mock
    Outputs:
      ApiGateway:
        Description: The arn of the api gateway
        Value: !Ref 'ImageApi'

Additional Ideas

Now that you have been introduced to the serverless image processing pipeline, think of the following enhancements:

- Host the website on CloudFront to cache the static content at the edge. Documentation

- Host the website on a custom domain name. Documentation

- Sentiment Analysis: Capture the emotion of any faces in the images.

- Face Recognition: Create a graph using DynamoDB and D3 showing images containing the same faces.

- Coordinate image processing with Step Functions rather than a single lambda function.

- Detect if the same image is uploaded multiple times (Hint: find any images that are already in the index by S3 ETag).

- Remove an image from the index if it was deleted from the S3 bucket. Don't forget to delete the thumbnail too.

- Filter using moderation labels with Rekognition

- Detect Text using Rekognition

- Use your imagination!!!

Show us something cool!

If you have extra time, team up with people near you and create an interesting extension to the workshop.

Don't be shy, we'd love to see your creations.

Cleanup

In your SSH window, paste the following commands:

aws s3 rm --recursive s3://arc326l8836-workshop

aws s3 rm --recursive s3://arc326l8836-deploy

aws cloudformation delete-stack --stack-name arc326l8836-lambdas --region us-east-1

Once those resources have been deleted, complete the cleanup by removing the stack we created at the beginning of the workshop: select arc326l8836 and choose Actions->Delete Stack. Link to Console